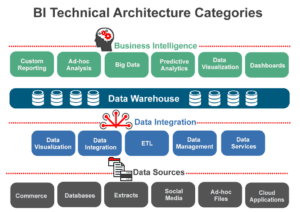

From around the 90s, the Enterprise Data Warehouse (EDW) has been the forerunner of nearly all large-scale business intelligence (BI) settings. Generally, a data warehouse (DW) is a centralized database that is used to report, plan and analyze summarized and relevant content data that is combined from various historical sources. An enterprise data warehouse employs technology to extract, transform, load (ETL) and transfer internal and external data sources to a centralized multipurpose data intelligence environment

A standard Enterprise Data Warehouse setting includes:

- Different storage and systems that give the data source

- Data integration and data staging by means of ETL (extract-transform-load) processes

- Processes that ensure high data quality and proper governance to make sure the DW achieves its objectives

- Applications and tools that help to profile data sources, supply the DW database and provide an analysis of the results

Traditionally, sources of data included flat files, relational database tables and internet services. But today other sources such as ERP, CRM, NoSQL, IoT, web log, social media and other big data sources have entered the fray.

As opposed to source OLTP databases that have normalized tables suitable for complicated queries and are modeled using E-R diagrams, the data warehouse database has been denormalized to allow for simple linkages which ensure quicker OLAP queries. It has data models that display more advanced snowflake’ or star’ schema. In addition, they are deemed to be time-variant and nonvolatile since they generate the same reports for diverse time periods. Today’s EDW and LDWs (logical data warehouses) have more volatility.

A Data mart is a smaller-sized, departmental data warehouse that utilizes subsets generated from the primary data warehouse(dependent) or it is created for a single business unit (independent). The ODS (operational data store) is a temporary operational data store (or ODS) that is commonly meant for client files.

A more in-depth view

Even if there are various data warehouse/business intelligence architectures for data staging and integration (such as ELT and its hybrids), the ETL methodology developed by Ralph Kimball features front room BI data stores that are sandwiched by presentation services.

Even if there are various data warehouse/business intelligence architectures for data staging and integration (such as ELT and its hybrids), the ETL methodology developed by Ralph Kimball features front room BI data stores that are sandwiched by presentation services.

Over and above the simple ETL versus ELT decisions, there is an extensive list of other issues to be considered, for example the software and hardware systems operating in the bottom (data warehouse/ extract-transform-load) middle (Online Analytical Processing) and top (Business Intelligence) levels of the EDW. To reach the threshold, EDWs typically utilize SQL-driven relational databases. But with data entering petabyte ranges, multi-core Unix servers, mainframes and Hadoop data nodes have become commonplace, together with NoSQL databases such as MarkLogic.

At the bottom level, vendor products manage ETL and associated data delivery issues such as data capture, replication and migration and diverse kinds of gradually-changing dimension updates.

In the middle level, the decision of whether to go with relational online analytical processing (ROLAP), or Multidimensional online analytical processing (MOLAP), is typically made. Under ROLAP, the data is relational –in which case similar results call for SQL queries-while in MOLAP the database is either multi-dimensional and holds component views (for example sales by time) set in arrays.

Therefore, multi-dimensional databases are quicker when doing analytic processing white relational databases are usually employed in EDWs-where they sustain the business intelligence tools in the top levels instead. Decisions to do with both normalization form and partitioning strategy are hence frequently made to quicken the relational database queries.

In the top level, data mining and business intelligence options are almost limitless and are determined by the analytical or reporting (predictive, diagnostic, prescriptive and so on) needs. In these situations, an outside data preparation service eliminates integration from the business intelligence layer significantly accelerates common visualization platforms.

EDW applications and advantages

Craig Mullins, a DW consultant wrote a piece on TechTarget where he outlined that an EDW could:

- Monitor, administer and enhance corporate performance.

- Check and reshape a marketing campaign.

- Reevaluate and improve the efficiency of operations and logistics.

- Optimize product development and management

- Interrogate, merge and access different information collected from diverse sources

- Supervise and boost client relationships

- Help in providing projections related to future growth, deliverables and requirements

- Tidy and enhance the quality of data in your organization

Certain industry cases indicate that DWs and EDWs are employed to:

- Evaluate call, click and other consumer habits

- Identify insurance fraud or determine rates

- Review treatment results and give recommendations about drug therapies

- Supervise goods shipments and inventories

- Check equipment/device health and service status

- Make promotion and pricing decisions more efficient

- Anticipate criminal activities and thwart terrorist activities

- Rationalize staffing, fleet and corporate facilities

One main technical advantage of EDWs is their segregation from operational procedures in production transactions and applications. Mullins clarified that conducting analysis and queries in the enterprise data warehouses provide a useful method of looking at the past without influencing everyday business computing. The result is increased efficiency and greater profitability.

In addition, from a financial perspective, the EDW is a relatively good deal when one thinks of data delivery models, particularly when compared to less stable, open or governed alternatives such as Hadoop, data lakes and appliances. And due to competitive talent and tool markets, entry barriers have been lowered for people who thought implementing EDWs was too costly or complicated.

How EDW evolved

The EDW came about due to the convergence of infrastructure, opportunity, capacity and the need to turn transactional data into information-and all these elements have increased rapidly in the last two decades. The evolution of related information technologies caused a lot of businesses to change or break up in order to create room for data-based rules. Processes alternated between simple and complex and data could reduce in size or grow in a dynamic enterprise environment.

Today, in the age of big data, numerous additional targets and sources have emerged. There are well-documented problems of data veracity, volume, velocity, value and variety piling pressure on conventional EDWs. Such ideas and their outcomes are causing changes when it comes to the architecture of Enterprise data management, from earlier models such as the operational data store to modern ones like the logical data warehouse (LDW), data lake and the Enterprise Data Hub (EDH), which were created to adapt to modern-day analytic needs and data stores.

Consequently, some data management professionals now regard EDWs as legacy architecture that can still handle regular workloads related to reports, analytics and queries.

© Reach Marketing LLC 2018 All Rights Reserved.